About

The development of robotic assistants is being held back by the lack of a coherent and credible safety framework. Consequently, robotic assistant applications are confined either to research labs or, in practice, to scenarios where physical interaction with humans is purposely limited, such as surveillance, transport, or entertainment (in museums, for instance).

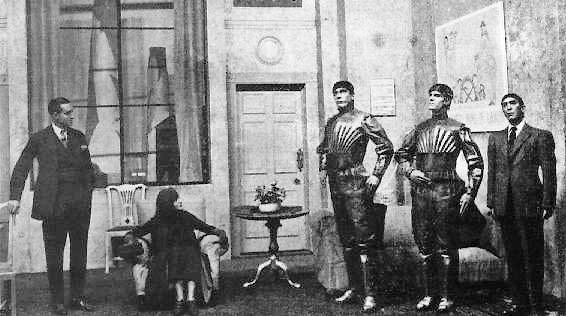

Rossum's Universal Robots: the 1920 play which introduced the word "robot" into the English language. Humans are on the left, robots are on the right.

In the domestic/personal domain, however, interactions take place in an informal, unstructured, and typically highly complex way. Even in more constrained industrial settings, the need for reduced manufacturing costs is motivating the creation of robots capable of much greater flexibility and intelligence. These robots need to work near to, be taught by, and perhaps even interact physically with, human co-workers.

So, how can we enhance robots so that they can participate in sophisticated interactions with humans in a safe and trustworthy manner? This is a fundamental research question that must be addressed before the traditional physical safety barrier between the robot and the human can be removed, and removing that barrier is essential for close-proximity human-robot interactions.

How, then, might we establish such safety arguments? Intrinsically safe robots must incorporate safety at all levels: mechanical, control, and human interaction. There has been some work on safety at the lower, mechanical levels, which severely restricts movements near humans regardless of whether those movements are "safe" or not. Crucially, no one has yet tackled the high-level behaviours of robotic assistants during interaction with humans, i.e. not only whether the robot makes safe moves, but whether it knowingly or deliberately makes unsafe moves. This is the focus of our project.

Formal verification exhaustively analyses all of the robot's possible choices, but uses a vastly simplified environmental model. Simulation-based testing of robot-human interactions can be carried out in a fast, directed way and involves a much more realistic environmental model, but is necessarily selective and does not take into account true human interaction. Formative user evaluation provides exactly this missing validation, constructing a comprehensive analysis from the human participant's point of view.
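The contrast between exhaustive analysis and selective testing can be illustrated with a deliberately tiny sketch. Everything in it is an assumption made for illustration: the one-dimensional robot model, the controller guard, the safety property, and all function names are hypothetical and do not represent the project's actual tools or models. The exhaustive check visits every reachable state of the simplified model, while the random simulation only samples some runs and so may or may not hit a violation.

```python
import random
from collections import deque

# Hypothetical toy model: the robot moves along a line towards a human.
# A state is (distance_to_human, speed), both small non-negative integers.
ACTIONS = (-1, 0, 1)  # brake, hold speed, accelerate
MAX_SPEED = 2
START = (4, 0)


def controller_allows(state, action):
    """Illustrative guard: no accelerating within 2 cells of the human."""
    distance, _ = state
    return not (action == 1 and distance <= 2)


def step(state, action):
    """Apply an action, then move the robot by its new speed."""
    distance, speed = state
    speed = min(MAX_SPEED, max(0, speed + action))
    distance = max(0, distance - speed)
    return (distance, speed)


def is_safe(state):
    """Assumed safety property: never arrive at the human while still moving."""
    distance, speed = state
    return not (distance == 0 and speed > 0)


def exhaustive_check(start=START):
    """Breadth-first search over every reachable state and permitted action.

    Returns a shortest trace to an unsafe state, or None if none is reachable.
    """
    seen = {start}
    queue = deque([(start, [start])])
    while queue:
        state, trace = queue.popleft()
        if not is_safe(state):
            return trace
        for action in ACTIONS:
            if controller_allows(state, action):
                nxt = step(state, action)
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append((nxt, trace + [nxt]))
    return None


def random_simulation(runs, steps, seed=0):
    """Sample random runs; returns True if no violation was observed."""
    rng = random.Random(seed)
    for _ in range(runs):
        state = START
        for _ in range(steps):
            allowed = [a for a in ACTIONS if controller_allows(state, a)]
            state = step(state, rng.choice(allowed))
            if not is_safe(state):
                return False
    return True


if __name__ == "__main__":
    print("counterexample trace:", exhaustive_check())
    print("no violation seen in 3 random runs:", random_simulation(3, 5))
```

In this toy model the guard is too weak, and the exhaustive search is guaranteed to find a trace that reaches the human at non-zero speed. A small number of random runs carries no such guarantee, which is the sense in which testing is selective. Conversely, the exhaustive result is only as trustworthy as the simplified model it was computed on.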

It is the aim of our project to bring these three approaches together to tackle the holistic analysis of safety in human-robot interactions. This will require significant research in enhancing each of these very distinct approaches so that they can work together and subsequently be applied in realistic human-robot scenarios. This has not previously been achieved. Developing strong links between the techniques, for example through formal assertions and interaction hypotheses, together with extending the basic techniques to cope with practical robotics, is the core part of our research.

Though non-trivial to achieve, this combined approach will be very powerful. Not only will analysis from one technique stimulate new explorations for the others, but each distinct technique actually remedies some of the deficiencies of another. Thus, this combination provides a new, strong, comprehensive, end-to-end verification and validation method for assessing safety in human-robot interactions.